Introduction

Want Google to find your best pages faster and waste less time on junk URLs? Tuning your robots.txt and building a focused XML sitemap helps search engines crawl efficiently and index more of your important pages. This short guide walks you through creating a lean sitemap, writing a clear robots.txt, fixing crawl errors and using noindex and canonical tags. You can implement these steps this week and track the results in Search Console.

Creating and Testing an XML Sitemap

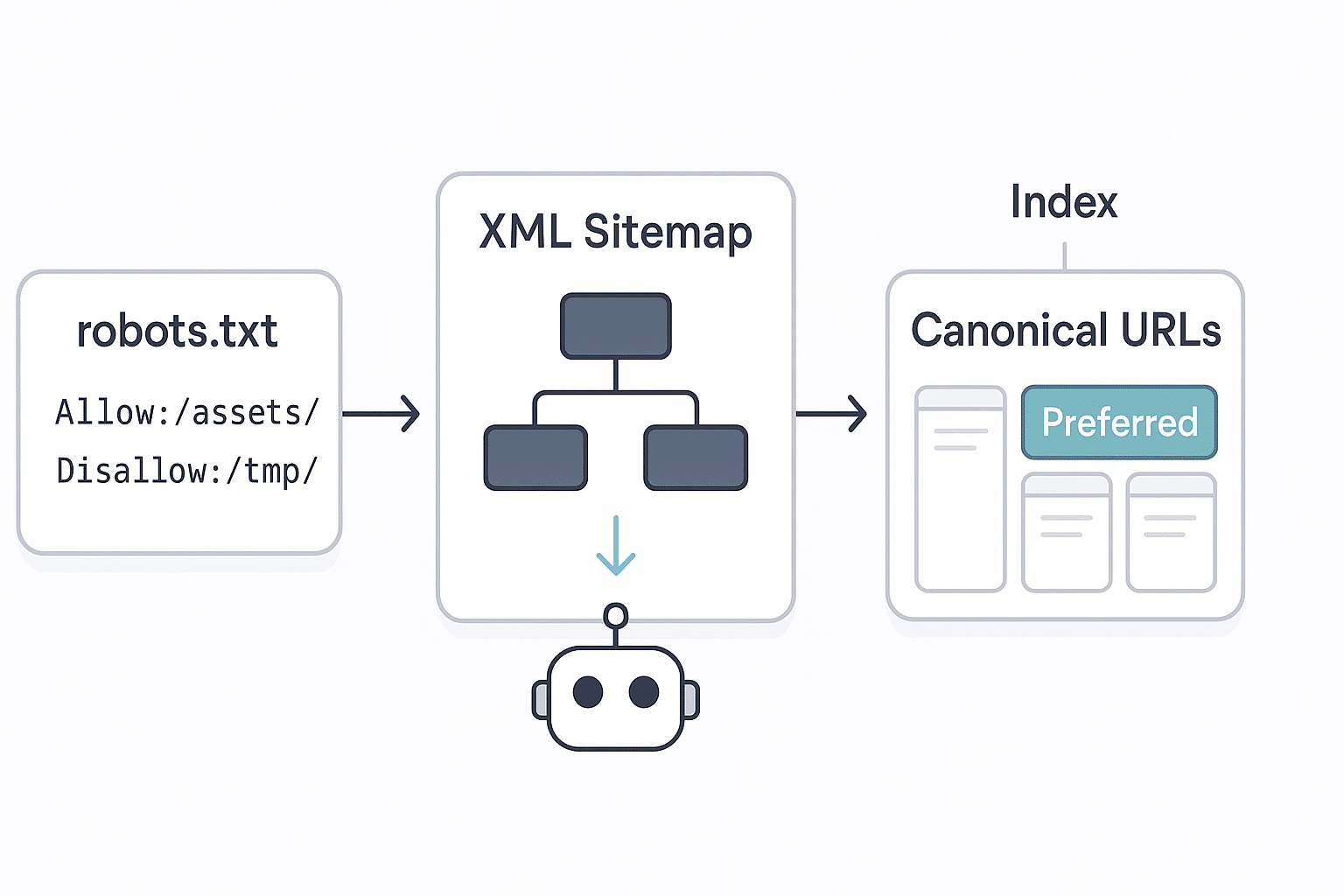

An XML sitemap lists the canonical URLs you want crawlers to discover. Include only pages you care about, not duplicates or parameter‑stuffed URLs. Use the optional <lastmod> tag to record significant changes; Google uses it only when the dates are consistently accurate, and it ignores <priority> and <changefreq> values. For large sites you can split the file and create a sitemap index (a single sitemap is limited to 50,000 URLs or 50 MB uncompressed); smaller sites can use a simple list. See the XML sitemaps guide for detailed format tips.
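As a rough illustration, a small sitemap can be generated with Python's standard library. This is a minimal sketch, not a full generator: the `build_sitemap` helper, the example.com URLs and the dates are all hypothetical, and it assumes you already know which URLs are canonical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Return sitemap XML for an iterable of (url, lastmod) pairs.

    lastmod is a 'YYYY-MM-DD' string, or None to leave the tag out.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        if lastmod:  # only emit <lastmod> when a date is actually known
            ET.SubElement(entry, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Hypothetical canonical pages; replace with your own URLs and dates.
pages = [
    ("https://www.example.com/", "2024-05-01"),
    ("https://www.example.com/services/", None),
]
sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

The sketch deliberately omits <priority> and <changefreq>, and writes <lastmod> only for pages where a real modification date is available.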

Submit your sitemap by adding a Sitemap: directive in robots.txt or via the Sitemaps report in Search Console. After submission, monitor the report for errors and check that listed pages move from “Discovered” to “Indexed”. Keeping your sitemap fresh helps Google find new content quickly and saves crawl budget for the URLs that matter.

Writing Robots.txt for Optimal Crawling

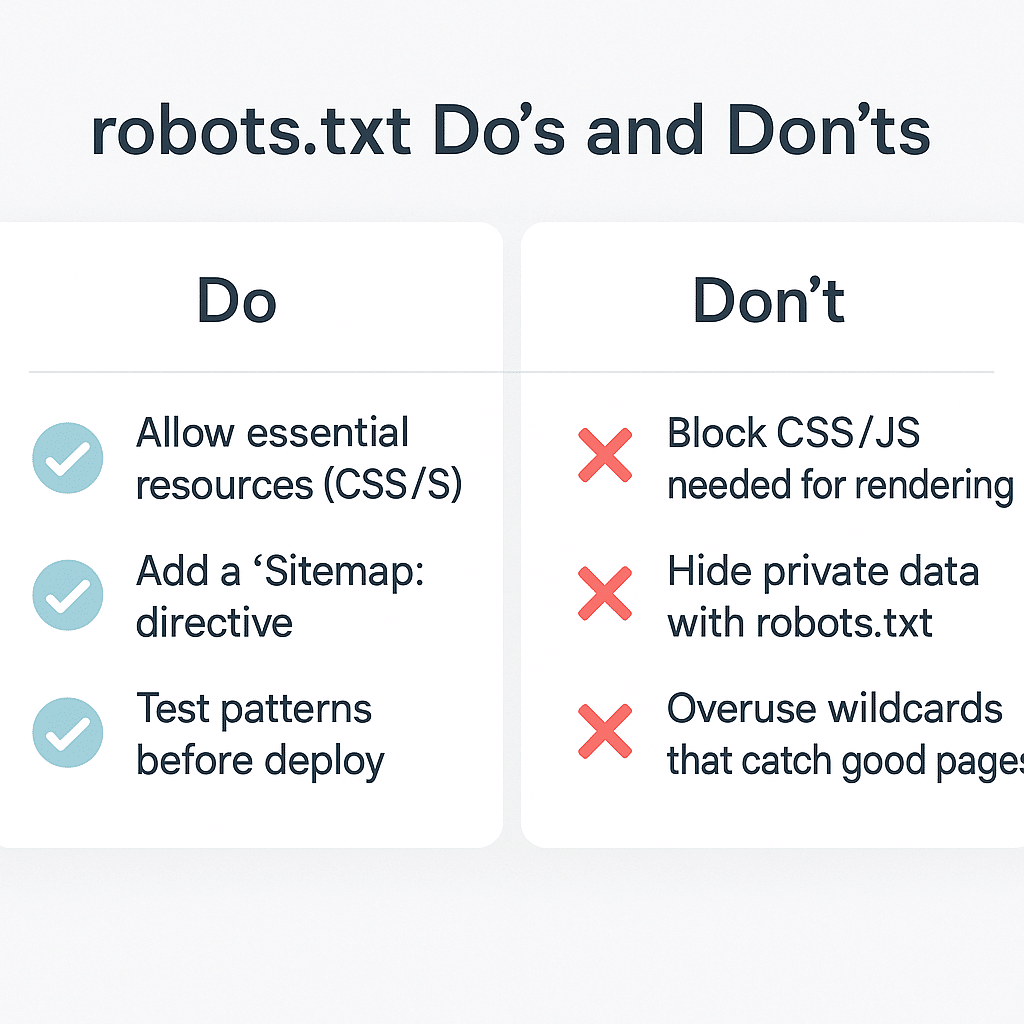

A robots.txt file sets rules for crawlers. Each group begins with a User‑agent line followed by Disallow or Allow patterns. For Google and other crawlers that follow the Robots Exclusion Protocol standard (RFC 9309), the most specific (longest) matching rule applies, regardless of the order in which rules are listed, and anything not disallowed is fair game. Use Disallow to block unimportant or duplicate paths, and Allow to open up a specific file inside a blocked section. You can also add Sitemap: lines to tell crawlers where your sitemap lives.

Keep the file simple. Block search results, endless faceted URLs and staging directories, but never block CSS or JavaScript essential for rendering. Robots.txt is not a security tool: disallowed pages can still be indexed if other sites link to them. To keep a crawlable page out of search results use noindex; for genuinely private content use password protection. After uploading the file to your domain root, test it with Search Console’s robots.txt report or an open‑source parser to confirm your patterns work as expected.
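One way to sanity-check a draft before uploading it is Python's built-in `urllib.robotparser`. The sketch below is only an approximation of how Google reads the file: the standard-library parser applies rules in file order and does not implement wildcard patterns, so the Allow line is listed before the broader Disallow to get the same result either way. The rules, paths and example.com domain are hypothetical, and `site_maps()` requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical draft robots.txt; adjust the paths to your own site.
ROBOTS_TXT = """\
User-agent: *
Allow: /internal/press-kit.pdf
Disallow: /internal/
Disallow: /search
Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

def is_allowed(path, user_agent="*"):
    """Return True if the draft rules let user_agent fetch the path."""
    return parser.can_fetch(user_agent, "https://www.example.com" + path)

for path in ["/blog/crawl-budget/", "/search?q=shoes",
             "/internal/notes.html", "/internal/press-kit.pdf"]:
    print(path, "allowed" if is_allowed(path) else "blocked")

print(parser.site_maps())
```

Treat the output as a quick check only; Search Console's robots.txt report remains the reference for how Google actually interprets the file.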

Fixing Crawl Errors in Google Search Console

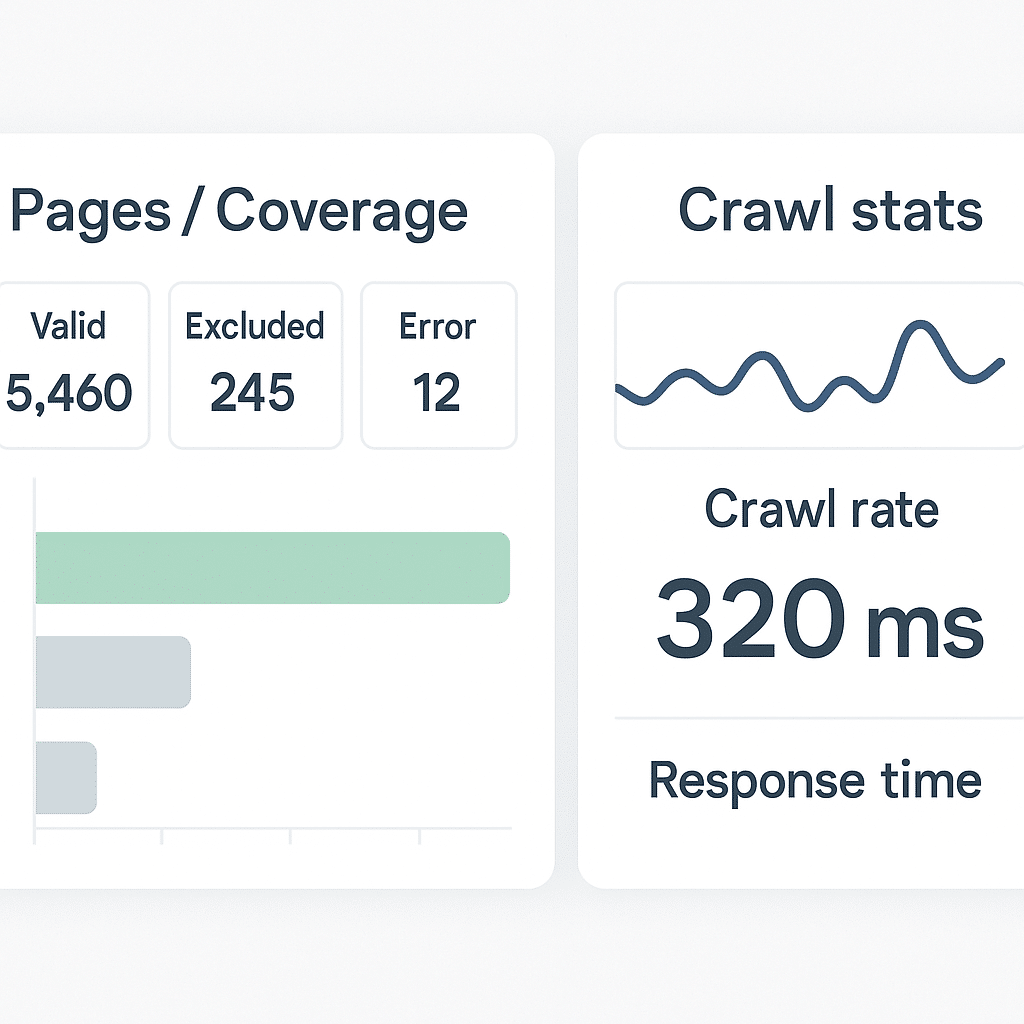

A healthy crawl profile means Google can access and index the pages that matter. Use the Pages/Coverage report to see which URLs are indexed, excluded or error‑ridden. The Crawl stats report shows whether your server is serving requests reliably. Slow responses or repeated 5xx errors reduce crawl capacity, so work with your developers to improve performance.

For page‑level diagnostics use the URL Inspection tool. It reveals whether a page is indexed, blocked or returning soft 404, 404 or 410 errors. Fix broken links or remove dead pages, and return proper 404/410 status codes so Google drops them from the crawl queue. Consolidate duplicates with canonicals or redirects. Keeping your sitemap up to date and removing stale URLs also frees crawl budget. Check the Crawl stats report regularly so you catch and fix issues quickly.
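If you export a list of URLs with their HTTP status codes (from a crawler or server logs), the clean-up described above can be sketched as a simple triage. The function names, action labels and sample data here are hypothetical, and status codes alone cannot reveal soft 404s, which still need the URL Inspection tool.

```python
from collections import defaultdict

def suggest_action(status):
    """Map an HTTP status code to a follow-up action (illustrative labels)."""
    if 200 <= status < 300:
        return "keep"
    if status in (301, 302, 307, 308):
        return "link to redirect target"
    if status in (404, 410):
        return "drop from sitemap"
    if 500 <= status < 600:
        return "fix server error"
    return "review manually"

def group_by_action(status_by_url):
    """Group URLs by the action suggested for their status code."""
    groups = defaultdict(list)
    for url, status in status_by_url.items():
        groups[suggest_action(status)].append(url)
    return dict(groups)

# Hypothetical crawl export: URL -> last observed status code.
crawl_results = {
    "https://www.example.com/": 200,
    "https://www.example.com/old-offer/": 410,
    "https://www.example.com/blog/moved/": 301,
    "https://www.example.com/checkout/": 503,
}
for action, urls in group_by_action(crawl_results).items():
    print(action, urls)
```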

Using ‘noindex’ and Canonicals to Control Indexing

Not every page needs to appear in search. A noindex tag tells search engines to drop a page from results. Add <meta name="robots" content="noindex"> in the <head> or send an X‑Robots‑Tag: noindex header. Make sure the page isn’t blocked by robots.txt or Google won’t see the tag. Use noindex on thin thank‑you pages, temporary campaigns or internal tools.
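To audit which pages carry a noindex directive, something like the following sketch can scan a page's HTML and response headers. It is a simplification under stated assumptions: the `has_noindex` helper and sample inputs are hypothetical, only the generic robots meta tag is checked (not crawler-specific names such as googlebot), and header values with a user-agent prefix are not handled.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            self.directives.update(
                d.strip().lower() for d in content.split(",") if d.strip()
            )

def has_noindex(html, headers=None):
    """Return True if the meta tag or X-Robots-Tag header says noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header_directives = set()
    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag":
            header_directives.update(d.strip().lower() for d in value.split(","))
    return "noindex" in parser.directives or "noindex" in header_directives

# Hypothetical thank-you page and PDF response headers.
page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(has_noindex(page))
print(has_noindex("<html><head></head></html>", {"X-Robots-Tag": "noindex"}))
print(has_noindex("<html><head></head></html>"))
```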

A canonical tag signals your preferred URL when duplicate content exists. You can indicate the canonical with a 301 redirect, a rel="canonical" link element or by listing only the canonical version in your sitemap. Combining methods strengthens the signal. Canonicals consolidate link equity, simplify tracking and prevent crawl budget waste. For more guidance see the canonical tags overview. Don’t block canonical pages with robots.txt, and avoid conflicting signals by declaring only one canonical URL per page. Link consistently to canonical URLs across your site.
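A quick consistency check along these lines can flag pages whose canonical signals disagree. This is a hedged sketch: `check_canonical`, its result labels and the sample markup are hypothetical, and it compares URLs as exact strings without normalising trailing slashes or relative links.

```python
from html.parser import HTMLParser

class CanonicalParser(HTMLParser):
    """Collect href values from <link rel="canonical"> elements."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rels = (attr.get("rel") or "").lower().split()
        if "canonical" in rels and attr.get("href"):
            self.canonicals.append(attr["href"])

def check_canonical(html, sitemap_urls):
    """Classify a page's canonical markup against the sitemap URLs."""
    parser = CanonicalParser()
    parser.feed(html)
    found = parser.canonicals
    if not found:
        return "missing"
    if len(set(found)) > 1:
        return "conflicting"      # more than one distinct canonical declared
    if found[0] not in sitemap_urls:
        return "not in sitemap"   # canonical and sitemap signals disagree
    return "ok"

# Hypothetical sitemap contents and page markup.
sitemap_urls = {"https://www.example.com/shoes/"}
page = '<head><link rel="canonical" href="https://www.example.com/shoes/"></head>'
print(check_canonical(page, sitemap_urls))
```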

Conclusion / Next Steps

Clear crawl instructions pay dividends. Create a lean sitemap of valuable canonical URLs, add accurate lastmod dates and submit it. Write a tidy robots.txt that blocks traps but leaves essential resources open. Use Search Console’s Pages, Crawl stats and URL Inspection tools to monitor performance and fix issues. Implement noindex and canonical tags wisely to control indexing. These steps help Google focus on your high‑value content and improve visibility and conversions.

Our Sydney SEO agency team can audit your crawl health and implement these recommendations for maximum ROI. For proof that these tactics work, explore our Case Studies. Track CTA clicks, lead form starts, submissions, time on page and scroll depth to measure how better crawlability translates to business outcomes.