Introduction

JavaScript powers modern websites, but search engines still process plain HTML most reliably. JavaScript SEO is the practice of making sure bots can find, render and index your pages. In 2025 Google still crawls your HTML first and runs scripts later, so content that only appears after JavaScript runs may be indexed late or missed. This playbook, written for marketing leaders and product teams, explains how to optimise rendering and crawlability in plain English.

How Google Renders JavaScript Today

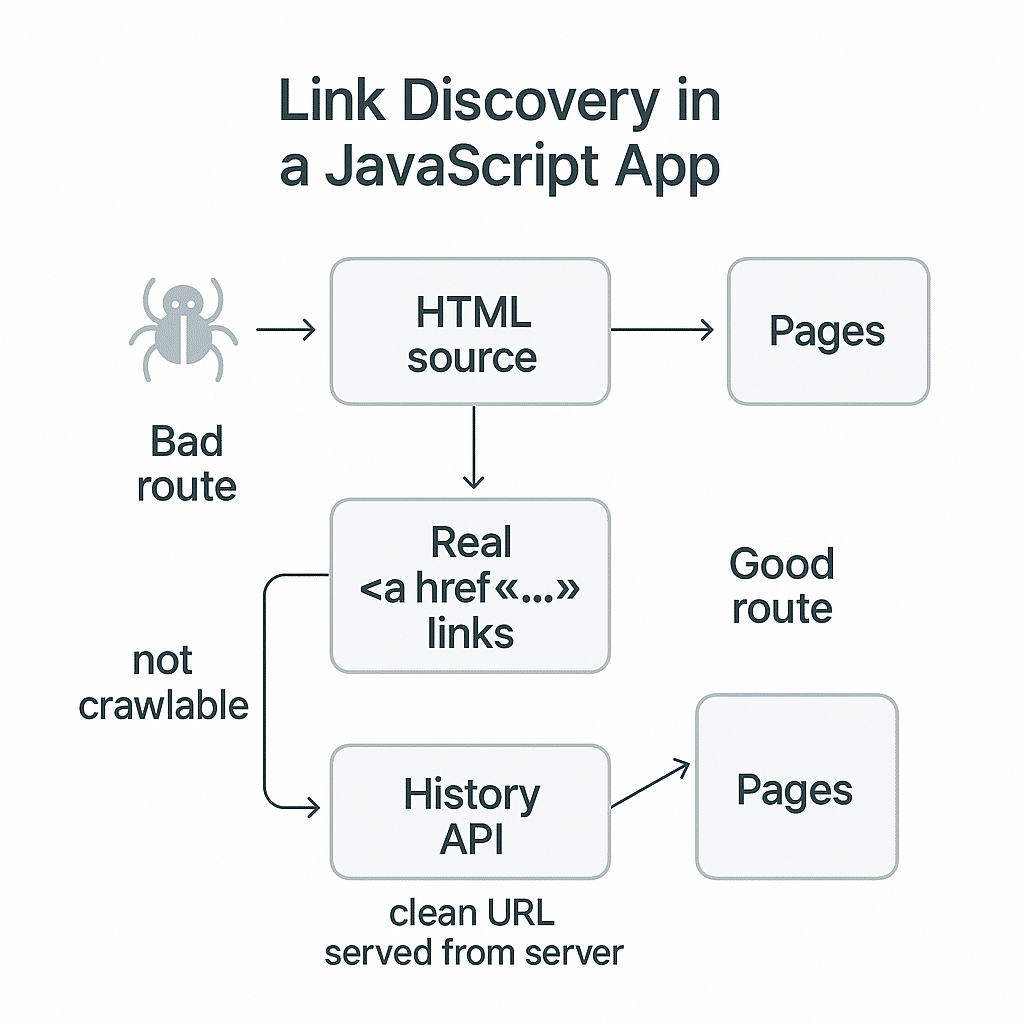

Google handles JavaScript pages in two phases. First, Googlebot fetches the HTML and extracts anchor tags with real URLs. Content in this source can be indexed without waiting for rendering. Then the page enters a render queue, where a headless version of Chromium runs your scripts to build the final page, and Google extracts more links and text from this rendered version. Rendering costs more than crawling, so pages may wait in the queue. If your initial HTML is empty, indexing may be delayed, and content that fails to render can be missed altogether. To help bots, include key content and internal links in the HTML and avoid blocking scripts or APIs in robots.txt. Always return meaningful HTTP status codes. Google details this two-phase process in its JavaScript SEO basics guide.
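For teams with developer support, the first phase can be approximated with a quick audit of the raw HTML. The sketch below is illustrative only: `extractHrefs` is a hypothetical helper, the sample pages are made up, and a regular expression stands in for a proper HTML parser. It lists the links a crawler could pick up before any script runs.

```typescript
// Hypothetical helper: lists the href values of <a> tags found in raw HTML,
// i.e. the links available before rendering. A regex is enough for a quick
// audit, but it is not a full HTML parser.
function extractHrefs(rawHtml: string): string[] {
  const anchorPattern = /<a\b[^>]*?\bhref\s*=\s*["']([^"']*)["']/gi;
  const hrefs: string[] = [];
  let match: RegExpExecArray | null;
  while ((match = anchorPattern.exec(rawHtml)) !== null) {
    hrefs.push(match[1]);
  }
  return hrefs;
}

// Made-up examples: a page with links in its HTML versus an empty app shell.
const serverRendered = `
  <h1>Running shoes</h1>
  <a href="/shoes/trail">Trail shoes</a>
  <a href="/shoes/road">Road shoes</a>`;
const emptyShell = `<div id="root"></div><script src="/bundle.js"></script>`;

console.log(extractHrefs(serverRendered)); // [ '/shoes/trail', '/shoes/road' ]
console.log(extractHrefs(emptyShell));     // []
```

An empty result for an important page suggests its links are only discoverable after rendering.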

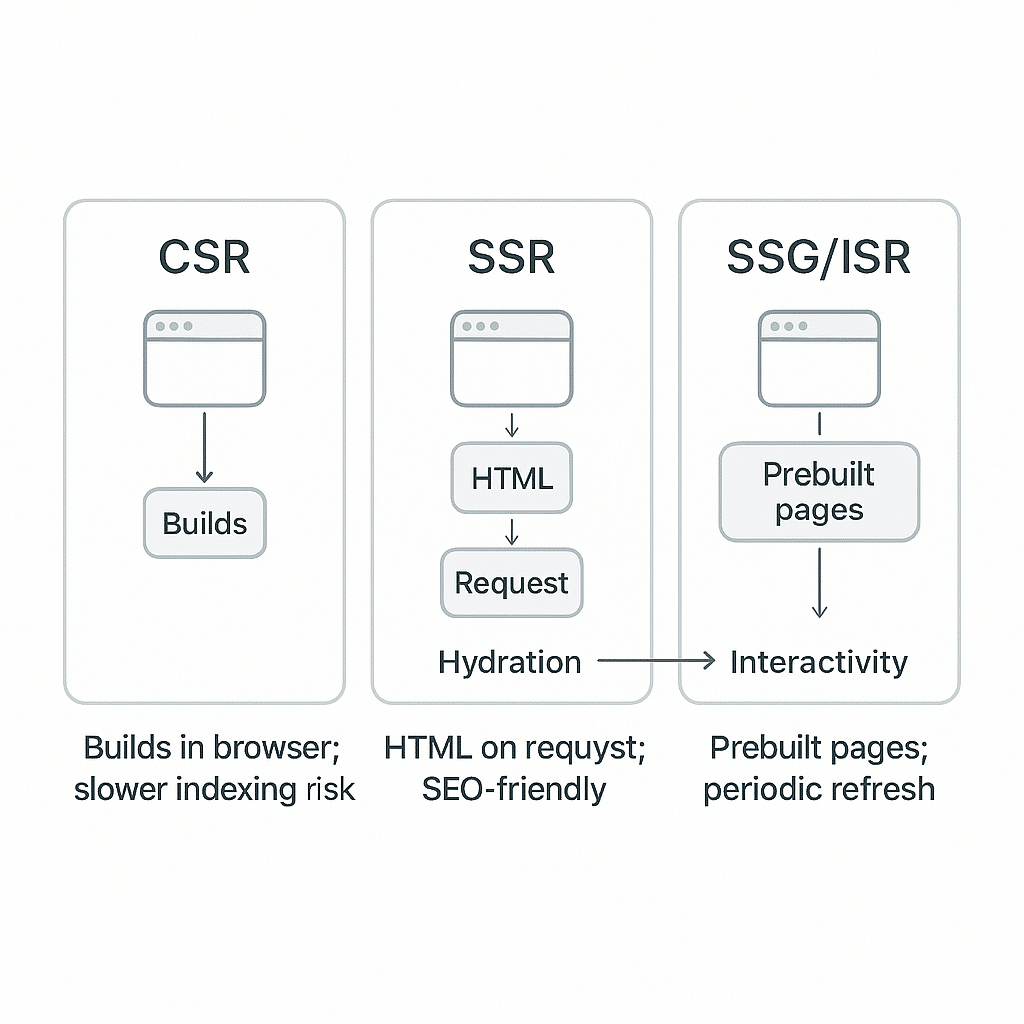

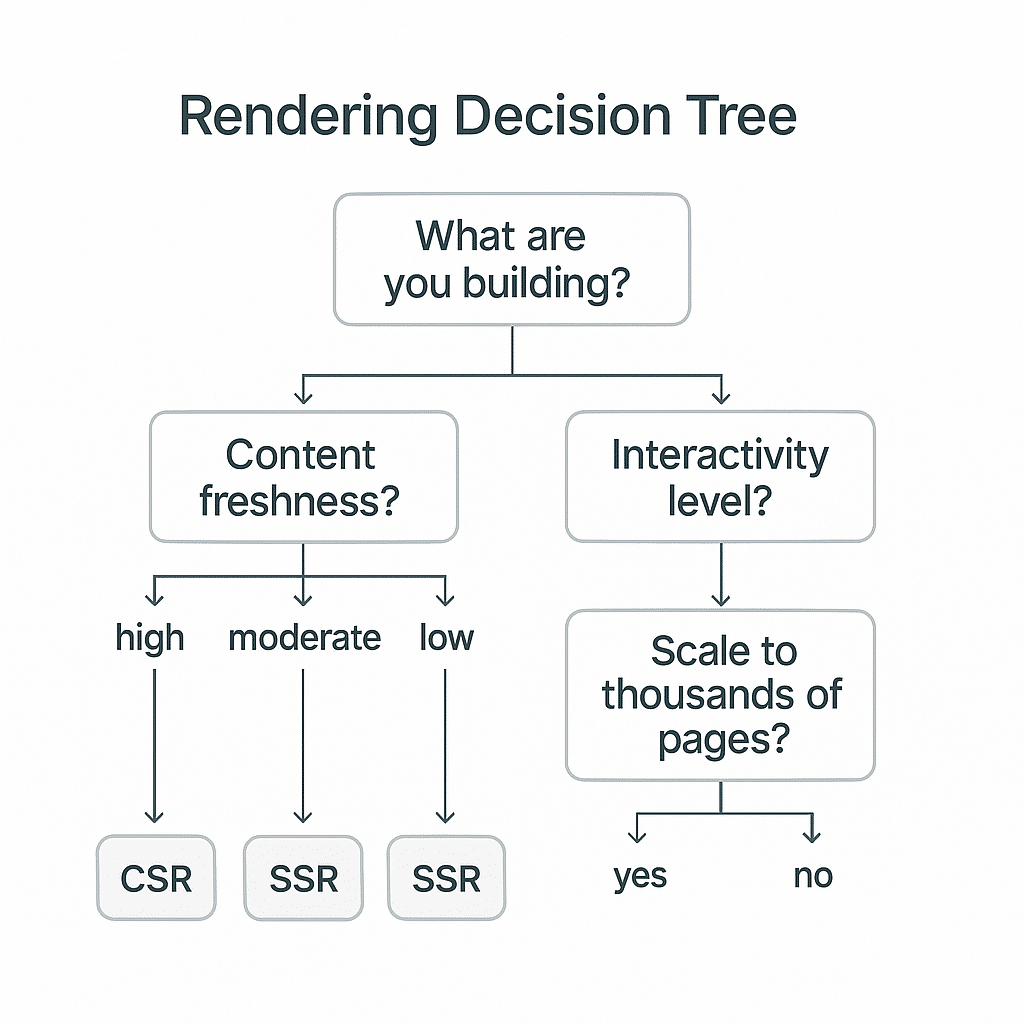

Rendering Models Explained (CSR, SSR, SSG/ISR) and Hydration

Client-side rendering (CSR) sends an almost empty page plus a JavaScript bundle that builds everything in the browser. It supports rich apps, but all content depends on the render phase, which can delay indexing. Server-side rendering (SSR) produces full HTML on each request; JavaScript then adds interactivity. SSR improves SEO and perceived speed but increases server work. Static site generation (SSG) pre-builds pages into static files at build time, and incremental static regeneration (ISR) rebuilds individual pages in the background, after a set interval or on demand. This yields fast loads and strong SEO for content that doesn’t change constantly. Hydration attaches event listeners to server-rendered HTML so it becomes interactive without rebuilding the markup. Use SSR or SSG/ISR for marketing pages and CSR for dashboards behind a login. When unsure, deliver meaningful HTML first. For an in-depth explanation of these models, see Verkeer’s JavaScript SEO guide.
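The difference between the models is easiest to see in the HTML each one sends. The following is a minimal sketch, not tied to any framework: the `Product` type, the function names and `/bundle.js` are all assumptions made for illustration.

```typescript
// Hypothetical page data, for illustration only.
interface Product {
  name: string;
  description: string;
  url: string;
}

// Escapes characters that would otherwise be read as HTML markup.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// CSR: the server sends a shell; content appears only after the bundle runs.
function renderClientShell(): string {
  return `<div id="app"></div><script src="/bundle.js"></script>`;
}

// SSR or SSG: the same page with its content already present in the HTML.
// The script still loads so hydration can attach event listeners afterwards.
function renderServerPage(product: Product): string {
  return [
    `<div id="app">`,
    `<h1>${escapeHtml(product.name)}</h1>`,
    `<p>${escapeHtml(product.description)}</p>`,
    `<a href="${escapeHtml(product.url)}">View details</a>`,
    `</div>`,
    `<script src="/bundle.js"></script>`,
  ].join("\n");
}
```

With SSR the function would run on every request; with SSG or ISR the same output would be written to a file ahead of time. Either way, the heading, text and link are in the HTML a crawler fetches first.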

Crawlability: Make Content and Links Discoverable

Crawlability means bots can discover your pages via internal links. Use standard <a> elements with an href that points to a real URL. Avoid hash-based routes because bots ignore fragments. In single-page apps use the History API so the URL changes and the server responds correctly. For pagination or faceted navigation, link to every page with a crawlable link. Google has said since 2019 that it no longer uses rel="next" and rel="prev" for indexing, so don’t rely on them alone. Infinite scroll should include a ‘load more’ link that loads a real page so bots can fetch more content. Clear anchor tags also improve accessibility. If you need help along the way, our Sydney SEO agency team can guide you.
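The link rules above can be turned into a simple check. The function below is a hypothetical sketch, and its rules are a simplified reading of the guidance in this section, not Google's actual logic.

```typescript
// Hypothetical check: does this href point to a real URL a crawler can fetch?
// Pass null for an <a> element that has no href attribute at all.
function isCrawlableHref(href: string | null): boolean {
  if (href === null) return false;
  const value = href.trim().toLowerCase();
  if (value === "") return false;
  if (value.startsWith("javascript:")) return false; // click handler, not a URL
  if (value.startsWith("#")) return false;           // fragment only, same page
  if (value.includes("#/") || value.includes("#!")) return false; // hash-based route
  return true;
}

console.log(isCrawlableHref("/pricing"));           // true
console.log(isCrawlableHref("/blog?page=2"));       // true
console.log(isCrawlableHref("#/pricing"));          // false
console.log(isCrawlableHref("javascript:void(0)")); // false
```

A link such as `/guide#faq` still passes, because the part before the fragment is a real URL.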

Common JS Pitfalls to Avoid

Several mistakes stop JavaScript sites from ranking. Don’t block scripts or API calls in robots.txt. Avoid injecting essential content or metadata after a click because bots won’t interact. Hash-only routes look like a single URL to crawlers, so the views behind them are not indexed as separate pages. Over-aggressive lazy loading hides text or images until scrolling; bots may never see them. Limit lazy loading to non-critical items and provide noscript fallbacks. Keep your title and meta descriptions in the HTML rather than injecting them with JavaScript. Use meaningful status codes; returning 200 OK for missing pages creates soft 404s and misleads bots and users.
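The status-code point is the simplest to show in code. This is a minimal sketch under stated assumptions: the `pages` map and the `respond` function are hypothetical stand-ins for whatever your server or framework uses to look up content.

```typescript
interface PageResponse {
  status: number;
  body: string;
}

// Hypothetical content store: URL path -> page HTML.
const pages = new Map<string, string>([
  ["/", "<h1>Home</h1>"],
  ["/pricing", "<h1>Pricing</h1>"],
]);

// Returns 200 only when the page exists. A missing page gets a real 404
// instead of the app shell with a 200, which is how soft 404s arise.
function respond(path: string): PageResponse {
  const html = pages.get(path);
  if (html === undefined) {
    return { status: 404, body: "<h1>Page not found</h1>" };
  }
  return { status: 200, body: html };
}

console.log(respond("/pricing").status); // 200
console.log(respond("/missing").status); // 404
```

The error page can still be friendly and branded; what matters to crawlers is the status code that comes with it.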

Testing and Debugging Without Code

You don’t need to be a developer to check JavaScript SEO. Compare the raw HTML with the rendered DOM using your browser’s tools: View Source shows the raw HTML, while the Elements panel in developer tools shows the rendered DOM. Disable JavaScript to see roughly what crawlers see in the first phase. Google’s URL Inspection tool in Search Console shows the rendered HTML from Googlebot’s perspective. Compare the live test with the indexed version to ensure important content isn’t missing or blocked. The Rich Results Test confirms that structured data injected by JavaScript is visible. Regularly check the indexing reports in Search Console to monitor coverage and fix problems early.

Ship, Monitor, Improve

Launching a JavaScript site is only the start. Use Search Console reports to track how many pages are indexed and which queries bring traffic. Watch for crawling or rendering errors and correct them quickly. Core Web Vitals are Largest Contentful Paint (LCP), Interaction to Next Paint (INP) and Cumulative Layout Shift (CLS); the first two are the ones JavaScript affects most. INP measures how quickly your page responds to user actions and replaced First Input Delay in March 2024. LCP records when the largest visible element finishes rendering. Heavy scripts slow both metrics, so audit your bundles, defer non-critical code and optimise images. Prioritise fixes on pages that combine large images and complex scripts. Monitoring performance helps you recognise issues before they harm rankings.
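To decide which pages need attention, it helps to rate measurements against Google's published thresholds: LCP is good at 2.5 seconds or less and poor above 4 seconds, while INP is good at 200 milliseconds or less and poor above 500 milliseconds. The sketch below applies those thresholds; the type and function names are illustrative assumptions.

```typescript
type Rating = "good" | "needs improvement" | "poor";

// Google's published thresholds, in milliseconds.
const thresholds = {
  LCP: { good: 2500, poor: 4000 },
  INP: { good: 200, poor: 500 },
};

// Rates a single measurement. Google assesses these metrics at the
// 75th percentile of real-user page loads, so pass that value in.
function rate(metric: "LCP" | "INP", valueMs: number): Rating {
  const limit = thresholds[metric];
  if (valueMs <= limit.good) return "good";
  if (valueMs <= limit.poor) return "needs improvement";
  return "poor";
}

console.log(rate("LCP", 2100)); // good
console.log(rate("INP", 350));  // needs improvement
console.log(rate("LCP", 4500)); // poor
```

Pages rated "poor" on either metric are a sensible place to start auditing bundles and images.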

Conclusion / Next Steps

JavaScript SEO in 2025 isn’t mysterious. Deliver meaningful HTML first, choose the right rendering model and make sure crawlers can follow your internal links. Test often and monitor Core Web Vitals to keep pages fast and interactive. To see how other businesses solved similar challenges, browse our Case Studies. When you’re ready to get help, Contact us and let’s build a site that shines for users and search engines.
